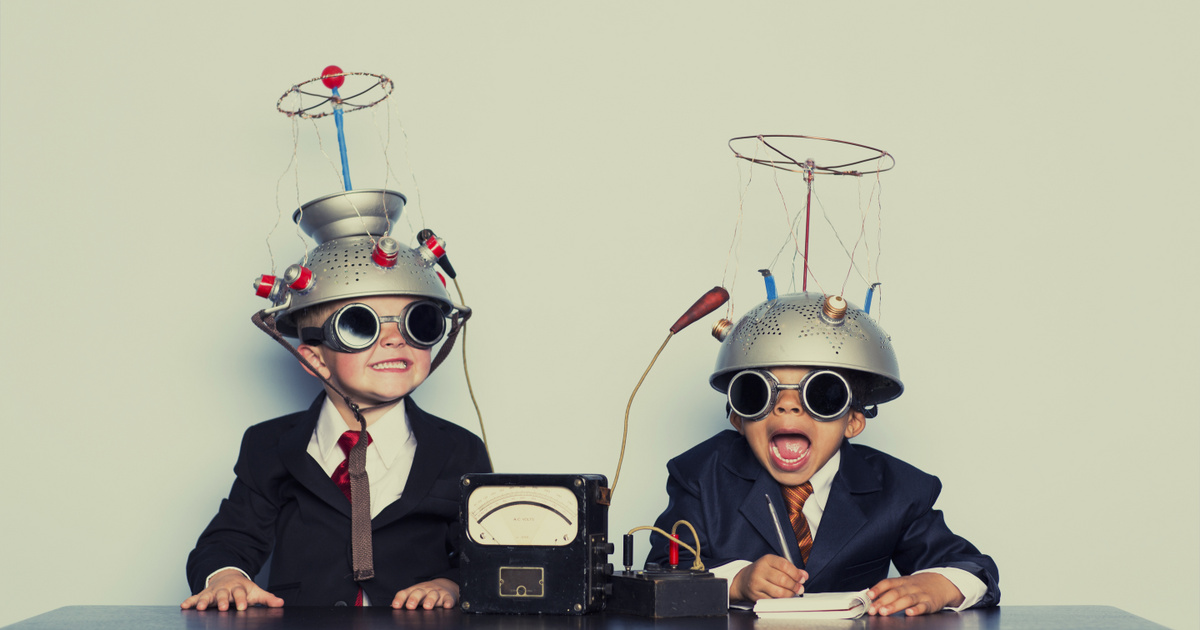

Researchers in information science at Cornell University have concluded that AI-based text generators, and smart services that help write emails by completing a sentence the user has started, do more than help with writing: they can also shape the author's thinking without the author noticing.

Doctoral student Maurice Jakesch recruited 1,500 volunteers for the study, in which participants were asked to write a paragraph answering the question “Is social media good for society?”

Curious about the relationship between human thinking and intelligent machines, Jakesch created a biased writing assistant that participants used through an interface that looked like a social media platform. The program recorded the behavior of people as they typed: which suggested phrasings they kept or ignored, and how much time they spent writing.

Those who drafted with the machine's help wrote paragraphs several sentences long. More importantly, compared with those who wrote unaided, twice as many leaned toward the position suggested by the machine.

We are trying to apply AI models to all areas of life, but we must better understand what this might entail. Beyond increasing efficiency and creativity, there may also be consequences for individuals and society, such as shifts in language and public opinion.

– noted one of the authors of the research, Mor Naaman.

A new dimension of influence

The researchers considered that people may simply want to get the job done quickly and are therefore more likely to accept the suggestions offered. But when they analyzed the time spent on the task, they found that even those who spent the most time writing reflected the machine’s opinion.

Follow-up questions also revealed that the participants were unaware that the machine had influenced their opinions. When the experiment was repeated with a different writing topic, the same effect was measured.

While writing, you don’t feel like you are being persuaded. It feels so natural and organic, like expressing my own thoughts with a little bit of support

Naaman said.

Until now, studies had mostly measured the persuasiveness of ads or motivational materials generated by ChatGPT, tracking how well they keep pace with the quality of human-written material; as is known, they now do so quite well. The research, presented at a conference in April, examined a more direct effect. Until now, the closest thing to it has been the behavioral manipulation known as nudging, which online has evolved into the toxic design technique known as dark patterns, with its unavoidable buttons and unstoppable ads.

Naaman, by contrast, describes what the machine can do in the guise of help and support as latent, or unconscious, persuasion. Everything points to the fact that in a very short period of time, artificial intelligence has made great progress in reading and writing human thoughts.

The spread of social media has completely reshaped political life by spreading misinformation and creating opinion bubbles. The advent of artificial intelligence could bring about similar changes. For example, New Zealand data scientist David Rozado analyzed ChatGPT's responses and found that they showed signs of “liberal, progressive, and democratic” bias, so he set out to create a right-wing conservative AI called RightWingGPT.

The researchers are now studying how users' opinions change and how long the effect lasts. In their view, the primary defense against the influence of AI is for as many people as possible to realize what the technology is capable of. Beyond that, formal regulations and laws could require transparent processes and provide a basis for holding bias and prejudice to account.

(Google)