Unless they have reason to doubt that the information available to them is complete, people generally assume they have enough of it to make a decision, according to research by Hunter Gehlbach, Carly Robinson, and Angus Fletcher. The good news, according to the researchers, is that most people are willing to change their minds once they see the full picture.

The researchers, from Johns Hopkins University, Stanford University, and Ohio State University, used a simple experiment. They presented participants with a scenario and examined how their attitudes toward a decision depended on the information they received.

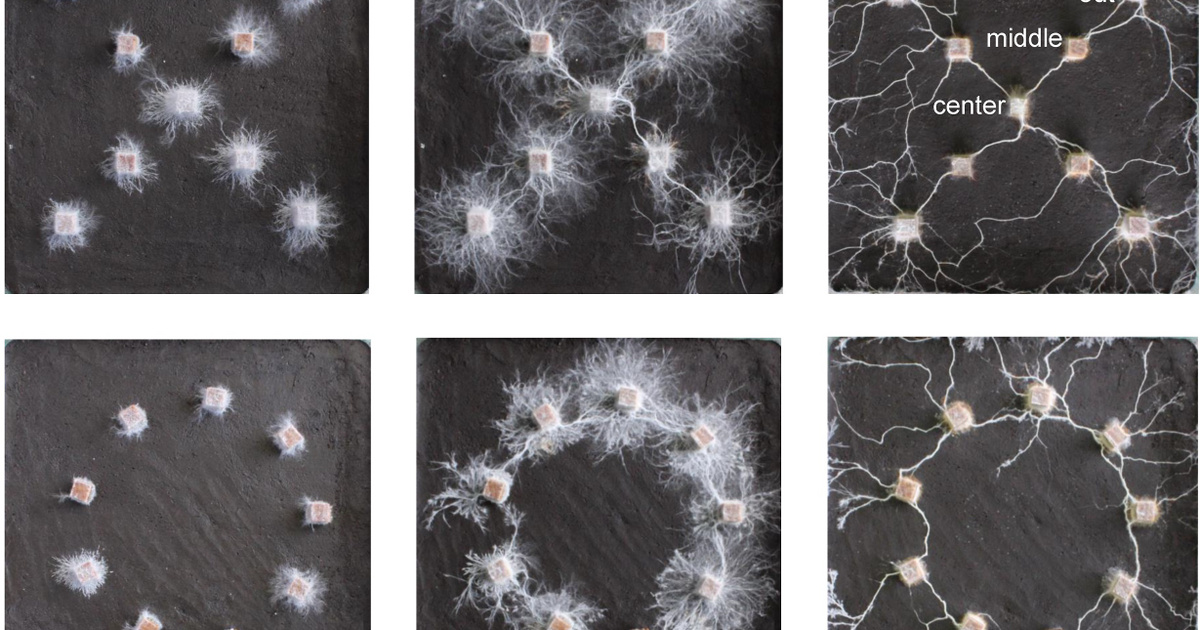

The scenario concerned a school district that had to decide whether to close a school and merge it with other schools because of water shortages. The description contained seven key pieces of information: three arguing against the closure, three in favor of it, and one neutral detail. The control group received all seven and was evenly split on what to do. The remaining participants were divided into groups that read versions of the article leaving out the facts that supported one of the two outcomes. These versions kept only the arguments for the other outcome, plus the neutral detail.

Each of these groups was then split in two. One half was asked right away what they would decide and how they expected the public to react to that decision. The other half first received a second article containing the information that had been left out of the first one, which they had not yet seen.

Participants in the first half, like those in the control group, were overwhelmingly confident that they had made a good decision and that the public would agree with them. Yet their decisions differed sharply depending on what they had read. Fewer than a quarter of those who read only the arguments against closure chose to close the school. In contrast, 90% of those who read only the arguments in favor supported closing it, and did so confidently.

Please don't be uncertain!

As mentioned above, the other half of each group did not evaluate the decision right away. Instead, they first received a second article slanted toward the opposite solution. In total they saw the same information as the control group. The difference was that it came as two one-sided accounts, presented one after the other rather than all at once. As in the control group, their decisions were evenly split. However, they were noticeably less confident that the public would share their view.

For this group, Gehlbach, Robinson, and Fletcher had hypothesized that participants would stick with the position suggested by the first article. Instead, participants behaved as if they had received all the information from the start.

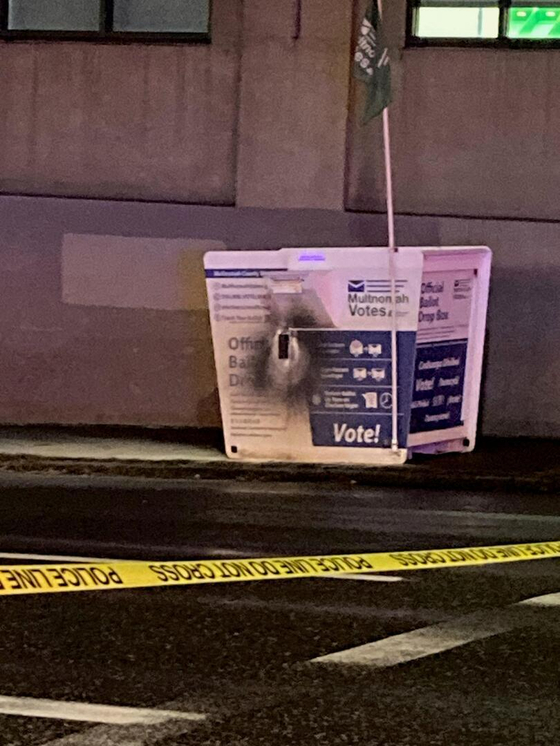

The result highlights the risk posed by misinformation spread through the media: people rarely seem to realize that the information they are given is incomplete. The flip side is encouraging, though. A learned bias generally lasts only until balanced information becomes available.

The phenomenon is reminiscent of the Dunning-Kruger effect: the less we know about a particular question or topic, the more likely we are to overestimate our knowledge of it. Put simply, people with the least competence in a domain tend to overestimate their competence the most.