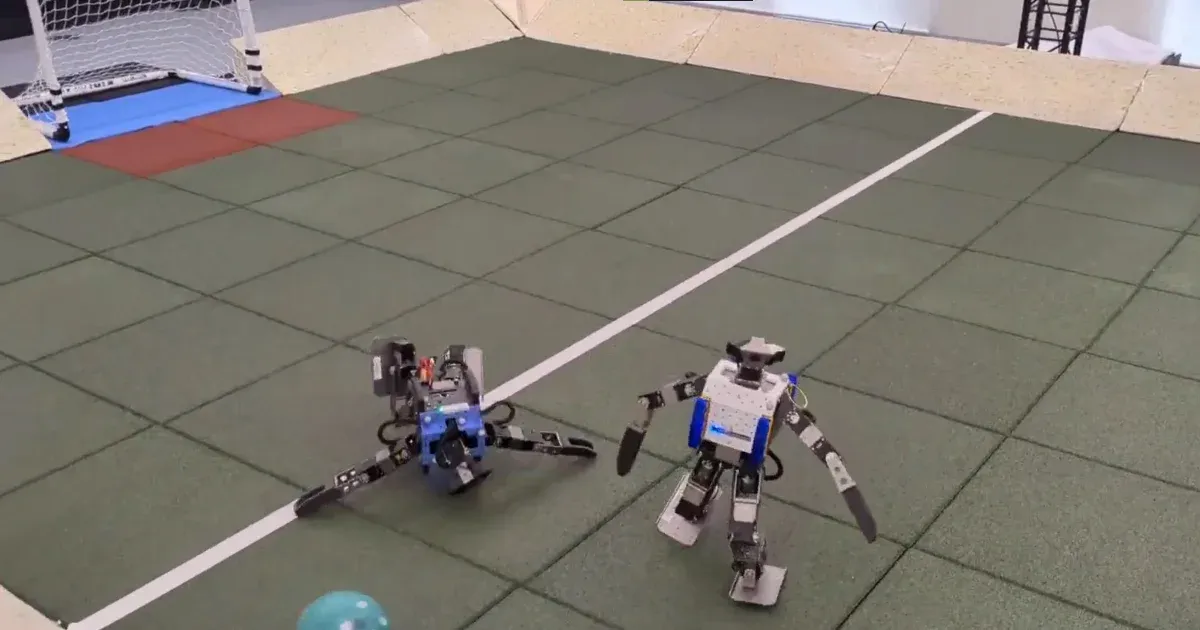

Google has used artificial intelligence to teach robots to play soccer. In the journal Science Robotics, researchers from DeepMind, part of parent company Alphabet, described how they developed motor skills for bipedal machines.

In the video, two robots play against each other. More precisely, they swing their arms and run after the ball, dribbling, blocking, falling over and getting back up. The last two happen fairly often, but with frightening speed.

One difficulty in programming robots is that every movement has to be defined in advance. DeepMind instead used several AI training methods to teach its robots to adapt to situations more autonomously.

The main method is called deep reinforcement learning. At its core, the machine receives a virtual reward when it completes a task. The researchers first fed in the basics of the necessary skills, then added deep reinforcement learning. As a result, their robots walked 181% faster, turned 302% faster, kicked 34% faster, and took 63% less time to get up after a fall than robots that were not trained with this technique.
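To make the reward idea concrete, here is a minimal sketch in Python. It is not DeepMind's system: it swaps the deep neural network for a small lookup table (plain tabular Q-learning), and the one-dimensional "pitch", the function names and every parameter are assumptions made up for illustration. A toy agent starts at one end of a line, the ball sits at the other, and the only feedback is a reward of 1 when the agent reaches the ball.

```python
import random

def train_q_table(n_positions=6, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1-D pitch; the ball is at the last position."""
    rng = random.Random(seed)
    moves = (-1, +1)                      # action 0 = step left, action 1 = step right
    goal = n_positions - 1
    q = [[0.0, 0.0] for _ in range(n_positions)]  # q[position][action]

    for _ in range(episodes):
        pos = 0
        for _ in range(100):              # cap the length of one episode
            # Explore occasionally, otherwise take the action that looks best so far.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[pos][0] > q[pos][1] else 1

            nxt = min(max(pos + moves[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0   # the "virtual reward"

            # Nudge the estimate toward the reward plus discounted future value.
            future = 0.0 if nxt == goal else gamma * max(q[nxt])
            q[pos][action] += alpha * (reward + future - q[pos][action])

            pos = nxt
            if pos == goal:
                break
    return q

def greedy_policy(q):
    """Best known action per position (1 means step right, toward the ball)."""
    return [0 if left > right else 1 for left, right in q]

if __name__ == "__main__":
    table = train_q_table()
    print(greedy_policy(table))
```

Nobody tells the agent to walk right. It works that out because moves toward the ball end up with higher learned values. The real robots face the same kind of problem at a vastly larger scale, with a neural network standing in for the table.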

Trained this way, the robots also tried things the researchers had never thought to program. For example, they pivoted on one corner of a foot, a move the authors say would have been difficult to pre-program, and while defending they ran in a pattern that further disrupted the attacking robot.

The point of all this isn't necessarily to roll the next generation of athletes off the assembly line, but to figure out how to teach robots fine motor skills relatively quickly, which could be useful in many industries.