
Order the weekly HVG in print or digitally and read us anywhere, anytime!

That’s why we ask you, our readers, to support us! We promise to keep doing the best we can!

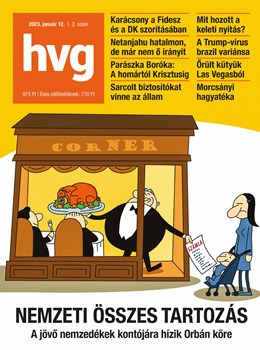

Recommended from the front page