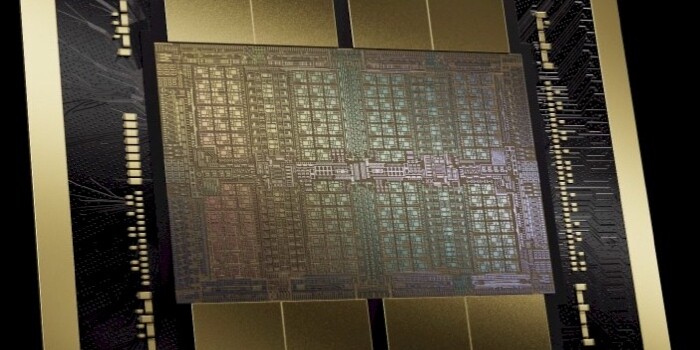

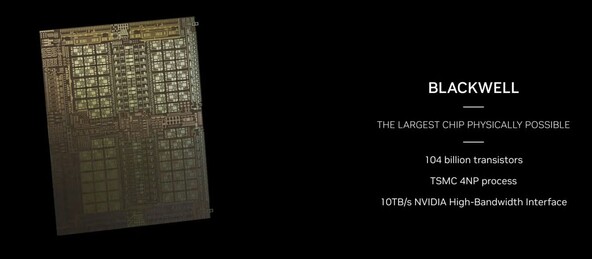

A single B200 GPU die in its full configuration.

The star of the show was the design codenamed B200, which is based on the Blackwell architecture. The new GPU is more focused on machine learning than ever before, but the company hasn't detailed exactly what's inside the 104-billion-transistor, 858 mm² chip made on TSMC's 4nm node. Those details will presumably follow later; in the meantime we have to make do with the data provided, according to which the chip contains 80 multiprocessors and is paired with 96 GB of HBM3E memory over a 4096-bit bus.

The B200, which has a maximum power consumption of 700 W, contains two of these GPU dies in its package, so the figures above double, and the NV-HBI link, capable of 10 TB/s, handles communication between the dies. In total, you can count on 192 GB of memory, 160 multiprocessors, 40 PFLOPS with FP4, and 20 PFLOPS with FP6 and FP8, while the connection to the host system is provided by PCI Express 6.0.
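The doubling described above is plain arithmetic, and a short Python sketch makes it easy to check. The per-die numbers are the ones quoted in this article; the dictionary keys and the `package_totals` helper are illustrative names, and the doubled memory bus width is only what follows from applying the same factor, not a figure stated here.

```python
# Per-die figures as reported in the article (one of the two dies in a B200 package).
PER_DIE = {
    "transistors_billion": 104,
    "multiprocessors": 80,
    "memory_gb": 96,
    "memory_bus_bits": 4096,
}
DIES_PER_PACKAGE = 2  # the B200 package holds two GPU dies


def package_totals(per_die, dies):
    """Scale every per-die figure by the number of dies in the package."""
    return {name: value * dies for name, value in per_die.items()}


totals = package_totals(PER_DIE, DIES_PER_PACKAGE)
for name, value in totals.items():
    print(f"{name}: {value}")
```

The memory and multiprocessor totals match the 192 GB and 160 multiprocessors quoted for the full package.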

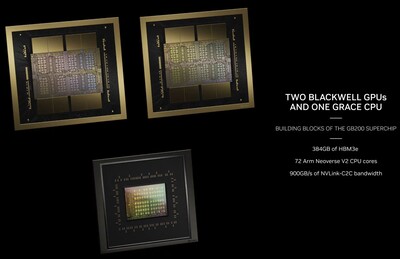

Alongside the B200 comes the GB200, which pairs two B200 GPUs with one Grace server processor. It is more of a platform than a single product, and NVIDIA also sells it as the DGX GB200 NVL72 rack server, which includes 36 Grace CPUs and 72 B200 GPUs in water-cooled form.
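The rack composition can be checked the same way. This is a rough sketch that assumes every B200 in the rack carries the full 192 GB of memory quoted for the package; the variable names are illustrative, and the resulting memory total is a derived estimate rather than a figure from the announcement.

```python
# Rack figures as reported in the article for the DGX GB200 NVL72.
GRACE_CPUS = 36
B200_GPUS = 72
GPUS_PER_GB200 = 2        # each GB200 pairs two B200 GPUs with one Grace CPU
MEMORY_PER_B200_GB = 192  # assumption: every GPU has the full quoted capacity

# Number of GB200 units implied by the GPU count.
gb200_units = B200_GPUS // GPUS_PER_GB200

# Consistency check: one Grace CPU per GB200 unit.
cpus_match = gb200_units == GRACE_CPUS

# Estimated total GPU memory in the rack, in GB.
rack_gpu_memory_gb = B200_GPUS * MEMORY_PER_B200_GB

print(f"GB200 units: {gb200_units} (matches Grace CPU count: {cpus_match})")
print(f"Estimated GPU memory per rack: {rack_gpu_memory_gb} GB")
```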

Incidentally, the DGX GB200 NVL72 uses a new NVLink Switch chip to connect the GPUs. The chip in question contains 50 billion transistors and is also produced on TSMC's 4nm node.

The company was not very forthcoming about exact availability, but it is certain that key partners will receive the devices this year.