A research team is working on a technique that can map the space in front of a person from light reflected from the eyeball. However, the end result is still far from perfect.

Interesting and, at the same time, somewhat disturbing experiments are underway at a research center of the University of Maryland in the United States. A group of specialists there is creating 3D images of a subject's environment based on the reflections that appear in the person's eyes.

According to a study the team recently published under the rather poetic title Seeing the World Through Your Eyes [PDF], the procedure relies heavily on an artificial intelligence technology called Neural Radiance Fields (NeRF), which is used to reconstruct a scene in three dimensions from conventional images. The raw material for this process is obtained by decoding the light reflections that can be registered on the surface of the eye.

There is something, but not the real thing yet

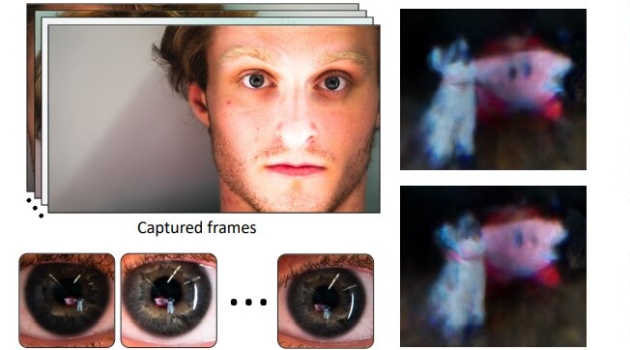

During the laboratory experiments, a camera in a fixed position took many high-resolution recordings of people who looked into the lens while moving according to certain instructions. The researchers then tried to clean the reflections extracted from these recordings of unwanted “noise” using various algorithmic tricks. This noise includes disturbing elements caused by the position of the cornea and the texture of the iris. Although the results would not pass for, say, the environments of a video game, it was essentially possible to reproduce objects placed in front of people (and carefully lit) in a recognizable way.
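To make the idea of reading a scene from the eye a little more concrete, here is a minimal sketch of the underlying mirror geometry. It is not the researchers' code. It assumes, purely for illustration, that the cornea behaves like a perfect spherical mirror, and the function name `reflect_off_sphere` and the 7.8 mm radius (a commonly quoted textbook value) are assumptions that do not come from the article. The sketch traces a ray from the camera to the sphere and computes the direction in which it bounces off, which is the direction the reflected light came from.

```python
import math

# Assumed typical corneal radius of curvature in millimetres (illustrative only).
CORNEA_RADIUS_MM = 7.8

def reflect_off_sphere(origin, direction, center, radius):
    """Reflect a ray off a spherical mirror.

    Returns (hit_point, reflected_direction), or None if the ray misses.
    Hypothetical helper: the cornea is idealised as a perfect sphere.
    """
    # Normalise the ray direction.
    length = math.sqrt(sum(x * x for x in direction))
    d = [x / length for x in direction]

    # Solve |origin + t*d - center|^2 = radius^2 for the nearest t >= 0.
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(x * y for x, y in zip(oc, d))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray passes beside the sphere
    t = -b - math.sqrt(disc)
    if t < 0:
        return None  # the sphere is behind the ray origin

    hit = [o + t * x for o, x in zip(origin, d)]
    # Outward surface normal at the hit point.
    n = [(h - ctr) / radius for h, ctr in zip(hit, center)]
    # Mirror reflection: r = d - 2 (d . n) n
    dn = sum(x * y for x, y in zip(d, n))
    reflected = [x - 2 * dn * y for x, y in zip(d, n)]
    return hit, reflected

if __name__ == "__main__":
    # Camera at the origin looking along +z at an eye 300 mm away.
    result = reflect_off_sphere([0.0, 0.0, 0.0], [0.05, 0.0, 1.0],
                                [0.0, 0.0, 300.0], CORNEA_RADIUS_MM)
    print(result)
```

Because the surface is strongly curved, camera rays that hit neighbouring points on the sphere leave in quite different directions, so a small patch of the eye covers a wide view of the room. This is also why small errors in the estimated position of the cornea can disturb the result.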

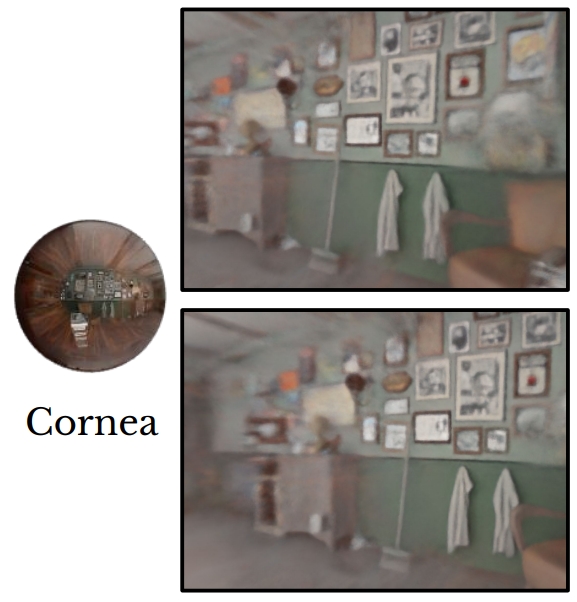

Much better images could be created with an artificial eye and the virtual environment it could “see”. The reconstructions made from these evoke a kind of hazy dream world, but the original location and the objects found there stand out better.

An example image of an artificial eye and the environment reconstructed from it

Incidentally, the creators presented their results not only in their scientific paper: a separate page is also devoted to the project, where the final output of several experimental setups can be viewed in the form of animations. This also shows that the technique is far from capable of producing acceptable results under uncontrolled conditions. For example, the algorithm couldn’t do much with music videos of Miley Cyrus and Lady Gaga: even with the best of intentions, one could at most guess at an LED grid and a camera tripod in the environment in front of the artists during recording.

Forward to the bright future!

However, the researchers consider the work so far very promising. The team regards its results as a milestone that others can build on when developing the technology further. It is another matter that most people will probably get along fine for a long time yet without anyone being able to reconstruct their room from a video call.