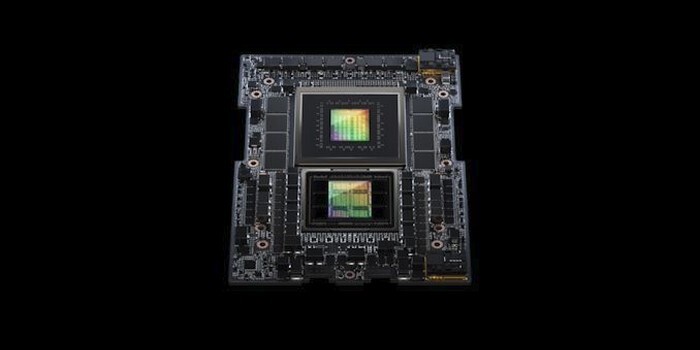

The new design provides more memory capacity and higher memory bandwidth.

At this year’s Computex, NVIDIA presented the GH200, which we also reported on at the time. The company has since modified the design and announced a successor to the system that uses HBM3e memory.

As described in our earlier article, the GPU in the GH200, which is connected via NVLink, originally used HBM3 memory with a bandwidth of about 4 TB/s. Replacing that memory with HBM3e raises the figure to 5 TB/s, and this is the essence of the new design. Memory capacity also increases, from 96 GB to 144 GB, which is useful when training larger neural networks.
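To put the two changes in perspective, the relative gains can be worked out directly from the figures quoted above. The following is a minimal sketch; the helper name `percent_increase` is our own, and the 4 TB/s value is the approximate HBM3 figure from the article, not an exact specification.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


# Figures as quoted in the article (the HBM3 bandwidth is approximate).
hbm3 = {"bandwidth_tb_s": 4.0, "capacity_gb": 96.0}
hbm3e = {"bandwidth_tb_s": 5.0, "capacity_gb": 144.0}

bandwidth_gain = percent_increase(hbm3["bandwidth_tb_s"], hbm3e["bandwidth_tb_s"])
capacity_gain = percent_increase(hbm3["capacity_gb"], hbm3e["capacity_gb"])

print(f"Bandwidth gain: {bandwidth_gain:.0f}%")
print(f"Capacity gain:  {capacity_gain:.0f}%")
```

With these inputs, bandwidth rises by roughly a quarter while capacity grows by half, so the capacity increase is the larger of the two changes.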

The company hasn’t detailed the other specifications, but they are likely the same as for the original GH200. That would mean a Grace CPU with 72 Arm Neoverse V2 cores and 480 GB of on-package LPDDR5X memory with ECC support and about 512 GB/s of bandwidth, plus a single GPU with 132 streaming multiprocessors, connected via a memory-coherent NVLink interface that provides 900 GB/s of bandwidth. The power consumption of the entire system also remains configurable between 450 and 1000 watts, and the chosen power limit clearly has a large effect on the achievable performance.

NVIDIA plans to introduce the HBM3e variant of the GH200 system in the second quarter of next year.